Drag-based Image Editing Emerging from Videos

Abstract

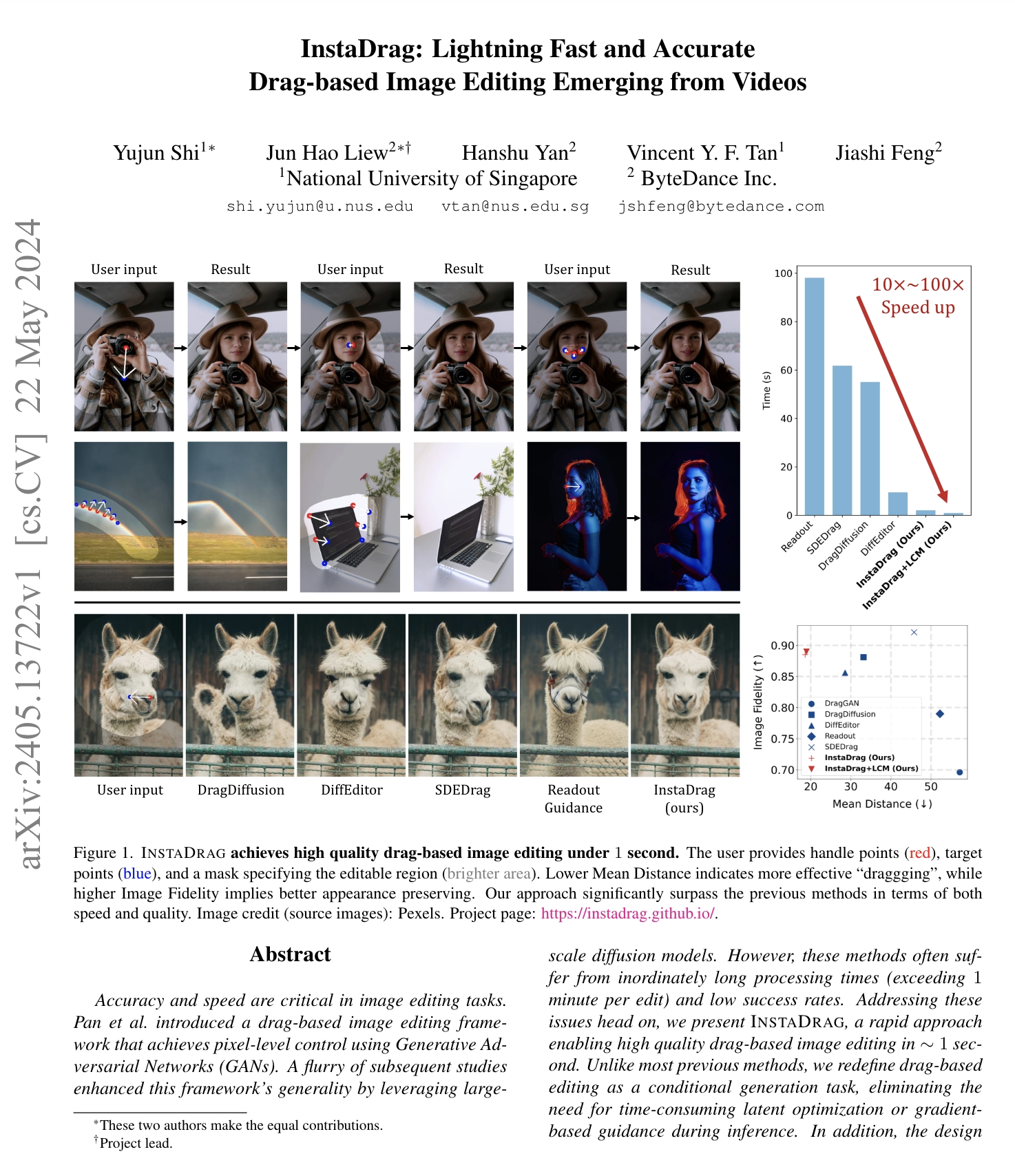

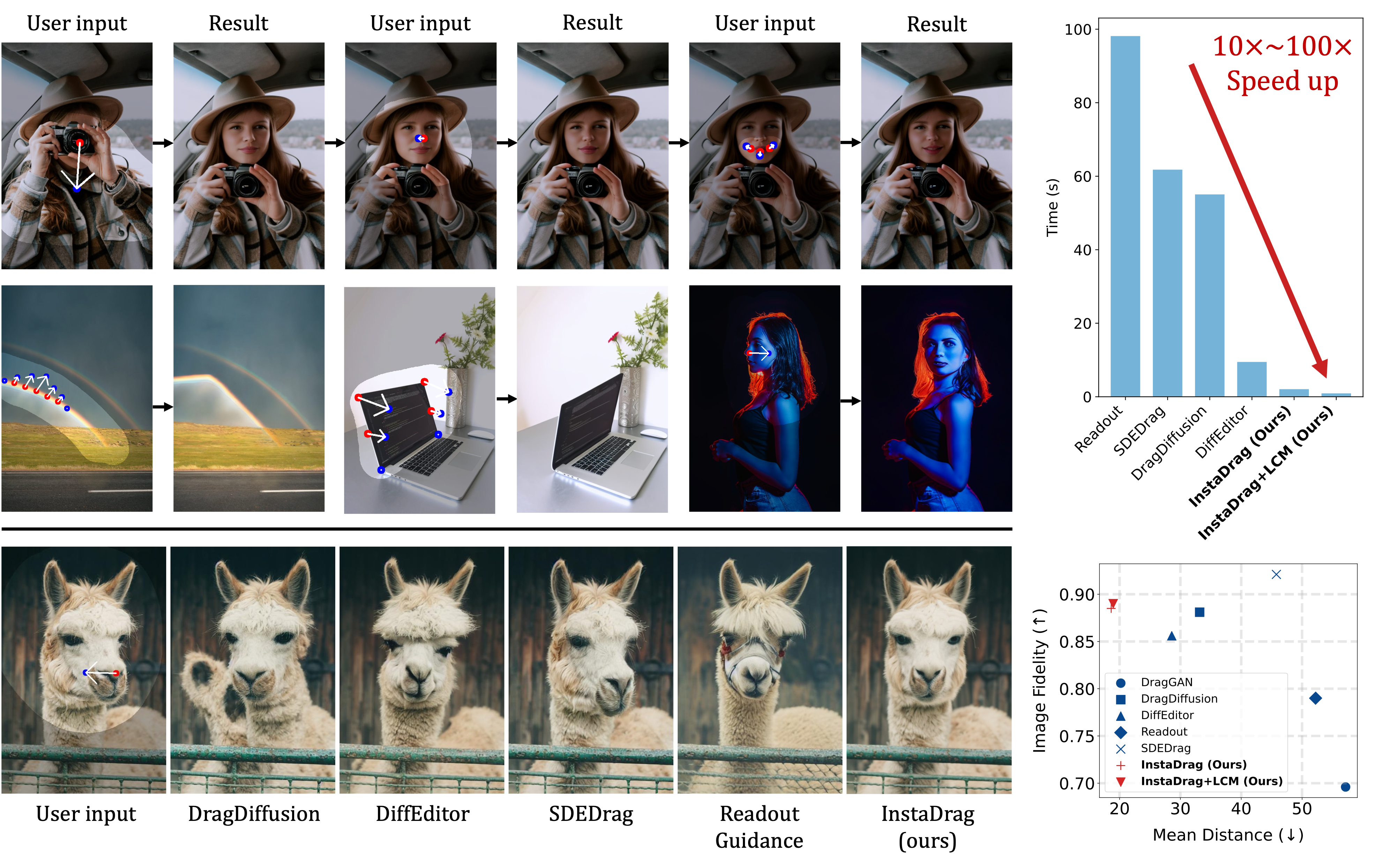

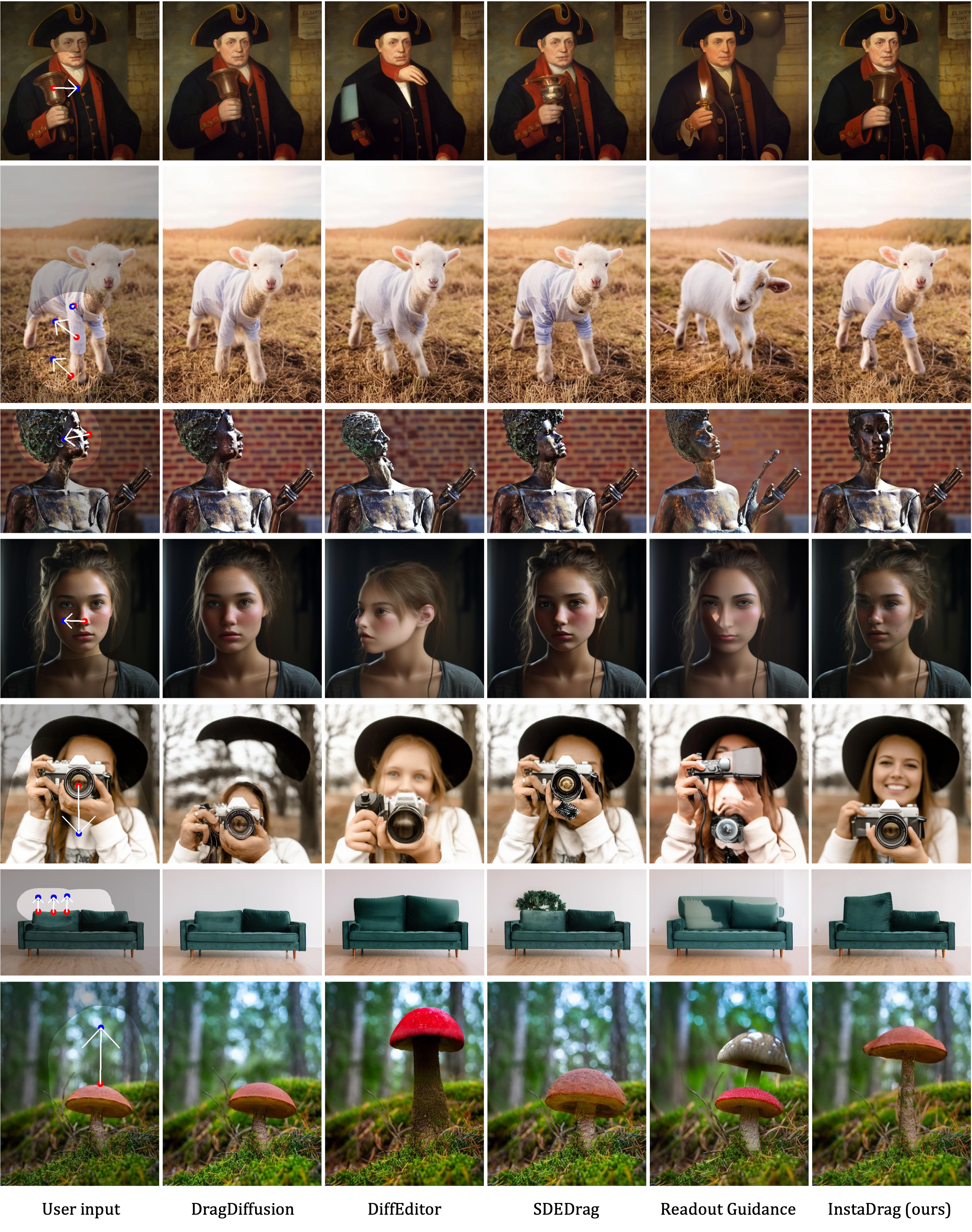

Accuracy and speed are critical in image editing tasks. Pan et al. introduced a drag-based image editing framework that achieves pixel-level control using Generative Adversarial Networks (GANs). A flurry of subsequent studies enhanced this framework's generality by leveraging large-scale diffusion models. However, these methods often suffer from inordinately long processing times (exceeding 1 minute per edit) and low success rates. Addressing these issues head-on, we present InstaDrag, a rapid approach enabling high-quality drag-based image editing in ~1 second. Unlike most previous methods, we redefine drag-based editing as a conditional generation task, eliminating the need for time-consuming latent optimization or gradient-based guidance during inference. In addition, the design of our pipeline allows us to train our model on large-scale paired video frames, which contain rich motion information such as object translations, changing poses and orientations, and zooming in and out. By learning from videos, our approach can significantly outperform previous methods in terms of accuracy and consistency. Despite being trained solely on videos, our model generalizes well to local shape deformations not present in the training data (e.g., lengthening hair or twisting rainbows). Extensive qualitative and quantitative evaluations on benchmark datasets corroborate the superiority of our approach. The code and model will be released.

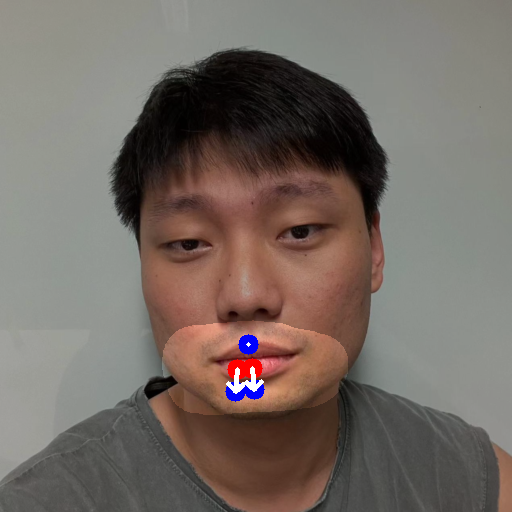

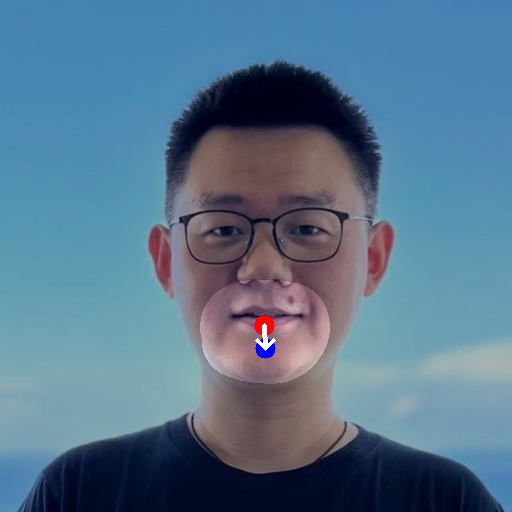

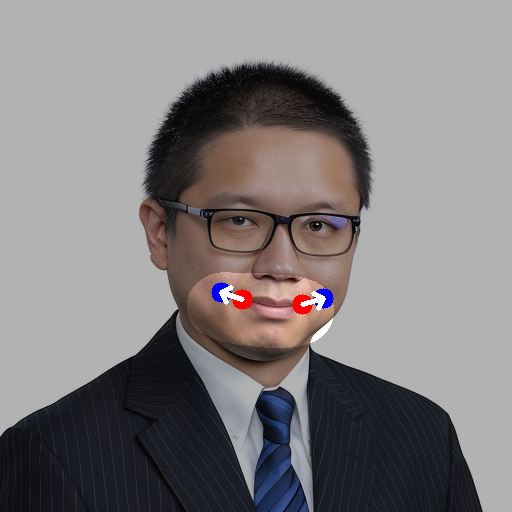

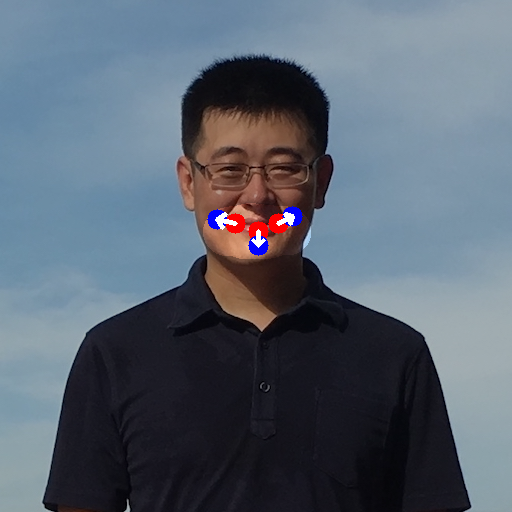

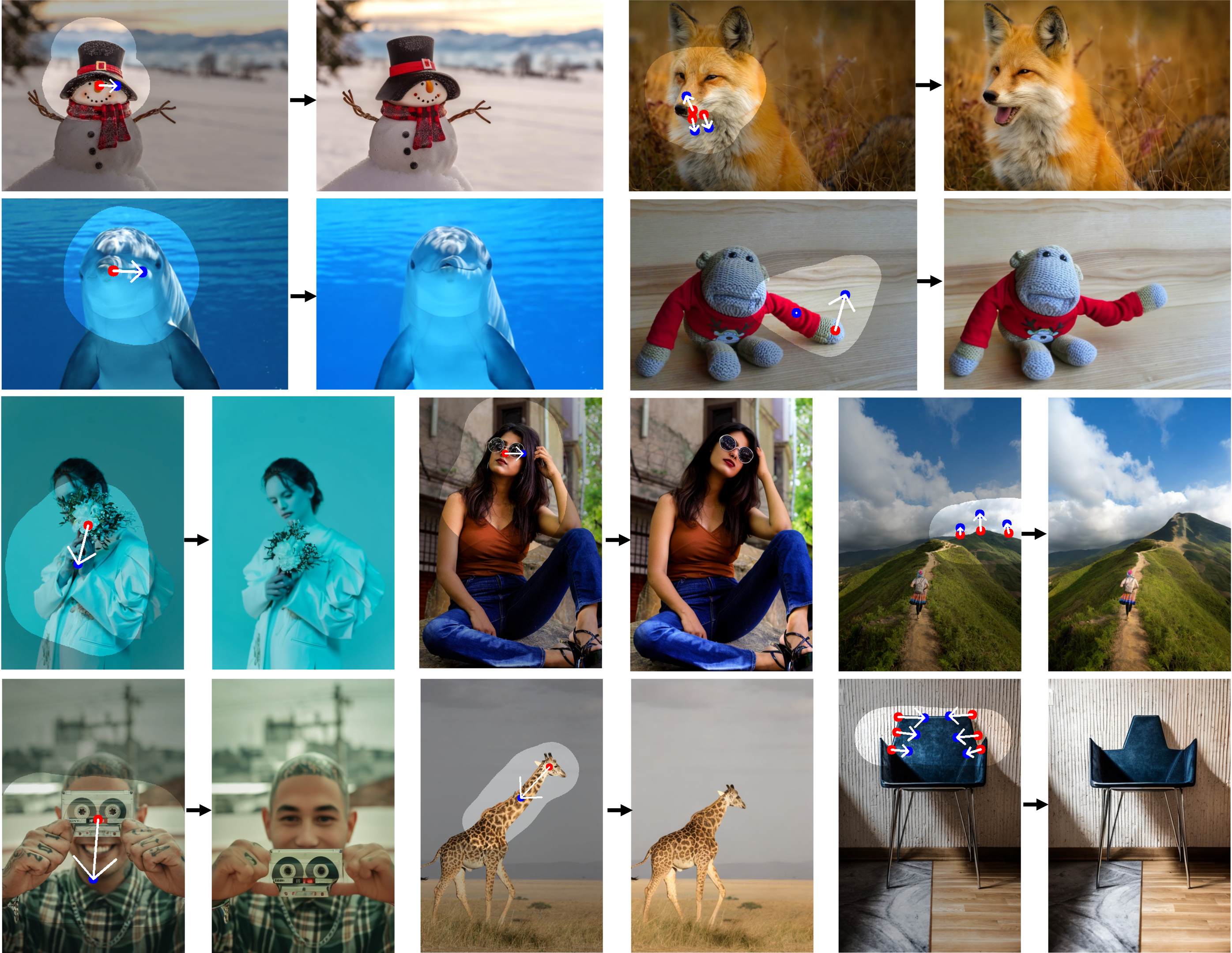

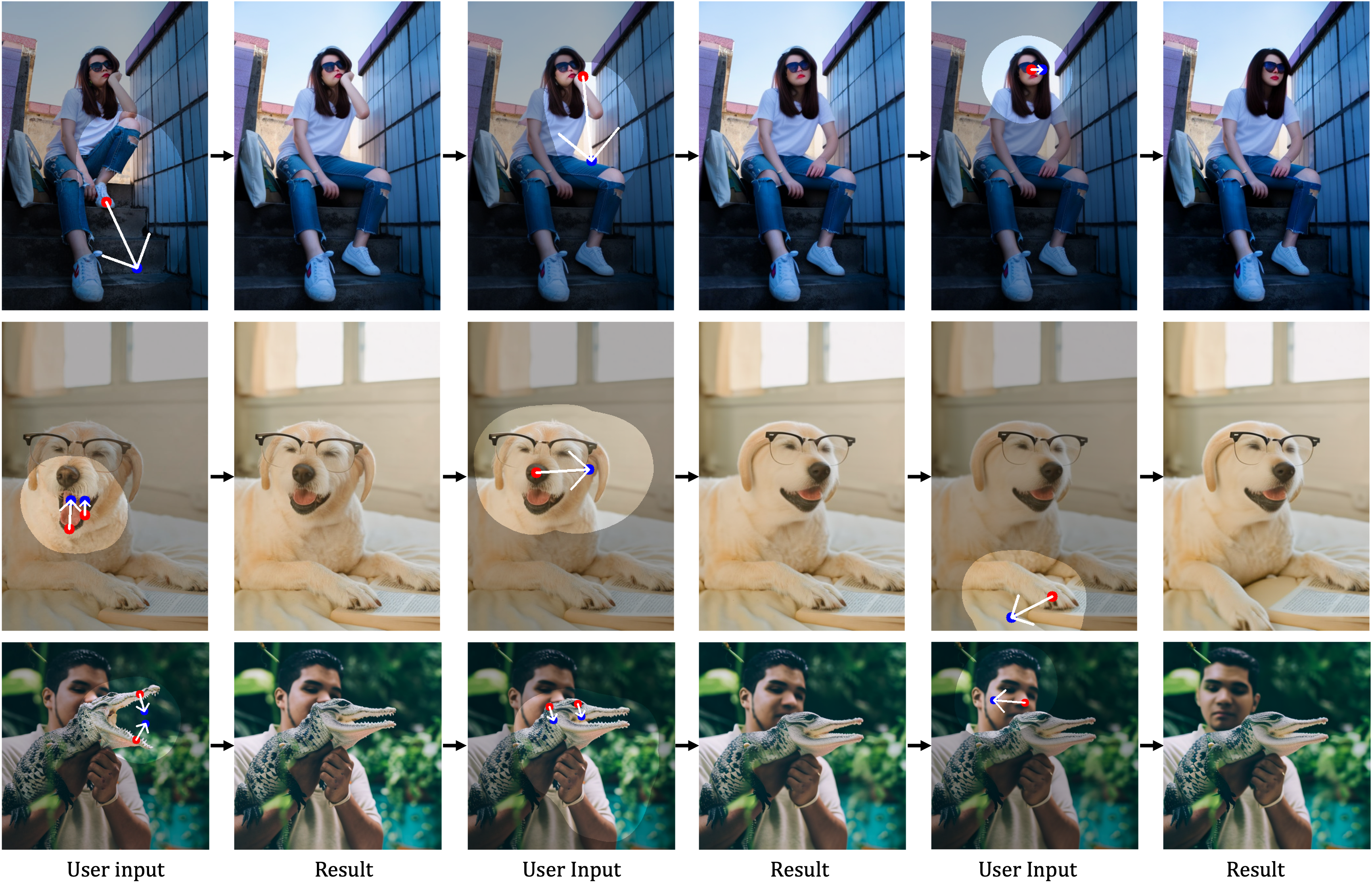

The user provides handle points (red), target points (blue), and a mask specifying the editable region (brighter area). Lower Mean Distance indicates more effective "dragging", while higher Image Fidelity implies better appearance preservation. Our approach significantly surpasses previous methods in terms of both speed and quality. Image credit (source images): Pexels.
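This page does not define Mean Distance precisely. As a rough illustration only, the sketch below assumes the common reading: the average Euclidean distance, in pixels, between where each handle point's content ends up in the edited image and its target point. How those end positions are located in the edited image (for example, by feature matching) is outside the scope of this sketch, so they are passed in as given. The function name `mean_distance` and the sample coordinates are hypothetical and not taken from the InstaDrag code.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinates


def mean_distance(moved_handles: Sequence[Point], targets: Sequence[Point]) -> float:
    """Average Euclidean distance between moved handle points and target points.

    Assumption: moved_handles[i] is the location, in the edited image, of the
    content that originally sat at the i-th handle point. Lower is better.
    """
    if len(moved_handles) != len(targets):
        raise ValueError("each handle point needs exactly one target point")
    if not targets:
        raise ValueError("at least one handle/target pair is required")
    total = sum(math.dist(p, q) for p, q in zip(moved_handles, targets))
    return total / len(targets)


# Hypothetical example: the first handle lands exactly on its target,
# the second ends up 5 pixels away (a 3-4-5 triangle).
moved = [(10.0, 10.0), (33.0, 44.0)]
targets = [(10.0, 10.0), (30.0, 40.0)]
print(mean_distance(moved, targets))  # 2.5
```

Under this reading, a perfect drag would score 0, and the score grows as the dragged content falls short of or overshoots its target.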

Live Demo

More Qualitative Results

Comparisons with Prior Methods

Single-Round Dragging

Multi-Round Dragging

Paper

InstaDrag: Lightning Fast and Accurate Drag-based Image Editing Emerging from Videos

Yujun Shi, Jun Hao Liew, Hanshu Yan, Vincent Y. F. Tan, Jiashi Feng

arXiv, 2024.

@article{shi2024instadrag,
  title={InstaDrag: Lightning Fast and Accurate Drag-based Image Editing Emerging from Videos},
  author={Shi, Yujun and Liew, Jun Hao and Yan, Hanshu and Tan, Vincent YF and Feng, Jiashi},
  journal={arXiv preprint arXiv:2405.13722},
  year={2024}
}

Acknowledgements

This template was originally made by Phillip Isola and Richard Zhang for a colorful project, and inherits the modifications made by Jason Zhang and Elliott Wu.